Some time ago we needed new storage for our 2-node RAC cluster because of disk warnings on our NFS storage server. We use this cluster only for performance, load and stress testing, so no production systems are involved. Rebuilding the cluster was an option, but we preferred not to, since Oracle provides enough tooling to maintain a RAC environment in place.

The plan is to move everything from DATA_OCR to the new disk group DATA_OCR_VD.

Check the current situation (root)

# cat /etc/oracle/ocr.loc
ocrconfig_loc=+DATA_OCR
local_only=FALSE

At the end of this process, ocrconfig_loc will point to the new DATA_OCR_2 disk group.
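To verify the ocr.loc change at the end, a small check can be scripted. This is a minimal sketch, assuming bash and awk on the cluster nodes and the key=value layout shown above; ocr_location and ocr_location_is are hypothetical helper names, not Oracle tools.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not Oracle tools): read ocrconfig_loc from ocr.loc.
# The default path is the one used above; pass another path to test a copy.
ocr_location() {
  local file="${1:-/etc/oracle/ocr.loc}"
  # Split each line on '=' and print the value of the ocrconfig_loc key
  awk -F= '$1 == "ocrconfig_loc" { print $2 }' "$file"
}

# Succeeds (exit status 0) if ocr.loc points to the expected disk group
ocr_location_is() {
  local expected="$1" file="${2:-/etc/oracle/ocr.loc}"
  [ "$(ocr_location "$file")" = "$expected" ]
}
```

For example, after the move: ocr_location_is +DATA_OCR_VD && echo "OCR location updated".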

# crsctl query css votedisk
##  STATE    File Universal Id                File Name          Disk group
--  -----    -----------------                ---------          ---------
 1. ONLINE   76af9477a1e94f6bbfb1f6642f4ce544 (/u02/nfsdg/disk1) [DATA_OCR]
 2. ONLINE   a43e34a502754fdfbfc52bcd37b04588 (/u02/nfsdg/disk2) [DATA_OCR]
 3. ONLINE   0690e8aa22164f17bfda3f3d2eb7db92 (/u02/nfsdg/disk3) [DATA_OCR]

DATA_OCR uses NORMAL redundancy, so ASM keeps three voting disks, one in each failure group.
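Because the voting disks have to be checked again after the move, the crsctl output can be counted per disk group. A minimal sketch, assuming the row layout shown above (index, state, file universal id, path, [disk group]); count_votedisks is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: count ONLINE voting disks in one disk group.
# Reads `crsctl query css votedisk` output on stdin.
count_votedisks() {
  local dg="$1"
  # Data rows look like: " 1. ONLINE <id> (<path>) [<diskgroup>]"
  # Header and summary lines never have ONLINE as their second field.
  awk -v want="[$dg]" '$2 == "ONLINE" && $NF == want { n++ } END { print n + 0 }'
}
```

For example, crsctl query css votedisk | count_votedisks DATA_OCR_VD should print 3 once all three voting disks have moved to the new NORMAL redundancy disk group.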

In SQL*Plus, connected to the ASM instance:

SQL> set lines 300
SQL> select MOUNT_STATUS, NAME, PATH from v$asm_disk;

MOUNT_STATUS NAME            PATH
------------ --------------- ----------------
CACHED       DATA_KTB_0000   /u02/nfsdg/disk6
CACHED       DATA_GIR_0000   /u02/nfsdg/disk5
CACHED       DATA_OCR_0002   /u02/nfsdg/disk3
CACHED       DATA_OCR_0000   /u02/nfsdg/disk1
CACHED       DATA_LRK_0000   /u02/nfsdg/disk4
CACHED       DATA_OCR_0001   /u02/nfsdg/disk2
CACHED       FRA_0000        /u03/nfsdg/disk7

7 rows selected.

Results for the ocrcheck command:

# ocrcheck
Status of Oracle Cluster Registry is as follows :
         Version                  :          3
         Total space (kbytes)     :     262120
         Used space (kbytes)      :       3072
         Available space (kbytes) :     259048
         ID                       :  982593816
         Device/File Name         :  +DATA_OCR
                                    Device/File integrity check succeeded
                                    Device/File not configured
                                    Device/File not configured
                                    Device/File not configured
                                    Device/File not configured
         Cluster registry integrity check succeeded
         Logical corruption check bypassed due to non-privileged user

The OCR looks healthy: both the device/file and the cluster registry integrity checks succeeded.
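The same health check can be scripted, for instance to run it before and after each step. A rough sketch, assuming the message texts shown above; ocr_is_healthy is a hypothetical name, and treating any line containing "failed" as unhealthy is our own conservative heuristic, not documented ocrcheck behaviour.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if ocrcheck output (read from stdin) reports
# both integrity checks as succeeded and no line mentions "failed".
ocr_is_healthy() {
  local out
  out=$(cat)
  grep -q 'Device/File integrity check succeeded' <<<"$out" &&
    grep -q 'Cluster registry integrity check succeeded' <<<"$out" &&
    ! grep -q 'failed' <<<"$out"
}
```

Usage (as root): ocrcheck | ocr_is_healthy && echo "OCR OK".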

Make OCR backup

Check if you have OCR backups available:

# ocrconfig -showbackup
m-lrkdb2 06/22 12:20:50 .../11.2.0/grid_2/cdata/m-lrkdbacc/backup00.ocr
m-lrkdb2 06/22 08:20:50 .../app/11.2.0/grid_2/cdata/m-lrkdbacc/backup01.ocr
m-lrkdb2 06/22 04:20:49 .../app/11.2.0/grid_2/cdata/m-lrkdbacc/backup02.ocr
m-lrkdb2 06/20 16:20:46 .../app/11.2.0/grid_2/cdata/m-lrkdbacc/day.ocr
m-lrkdb1 06/09 02:16:17 .../app/11.2.0/grid_2/cdata/m-lrkdbacc/week.ocr
PROT-25: Manual backups for the Oracle Cluster Registry are not available

Each backup file is approximately 7 MB.

You can also take a manual backup (as root):

# ocrconfig -manualbackup
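If you script the backup step, you can list only the manual backups right after taking one. A minimal sketch, assuming it runs as root with ocrconfig from the Grid Infrastructure home in PATH; backup_ocr is a hypothetical name, and it relies on ocrconfig -showbackup accepting the manual keyword, as it does in 11.2.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper (run as root): take a manual OCR backup, then list
# only the manual backups so you can see where the new file was written.
backup_ocr() {
  ocrconfig -manualbackup || return 1
  ocrconfig -showbackup manual
}
```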

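If you ever need to restore the OCR from one of these backups, use (as root):

# ocrconfig -restore <backup_file>

Note that ocrconfig -import only works with logical exports created by ocrconfig -export, not with the backups listed above.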

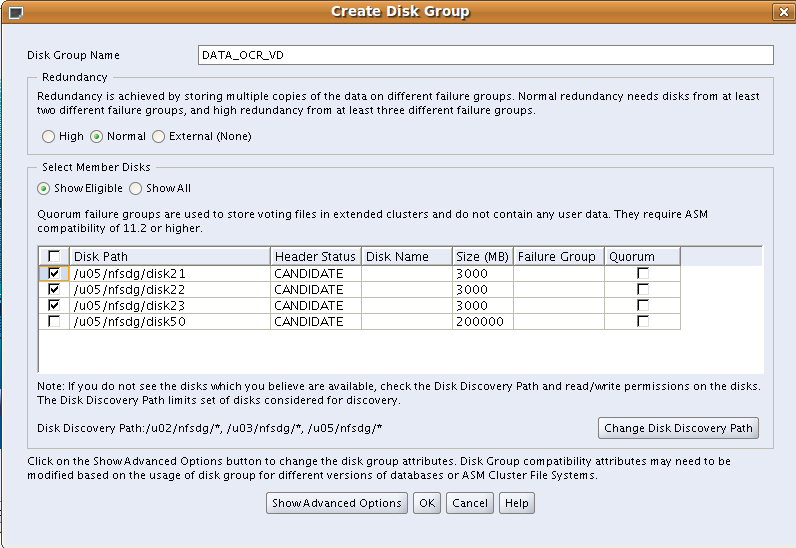

Add new OCR disk group

Create a new disk group for the OCR using ASMCA. Afterwards, v$asm_disk lists both the old and the new disks:

SQL> select failgroup, name from v$asm_disk;

FAILGROUP                 NAME
------------------------- ------------------------------
FRA_0000                  FRA_0000
DATA_OCR_0000             DATA_OCR_0000       <-- old
DATA_OCR_0001             DATA_OCR_0001       <-- old
DATA_OCR_0002             DATA_OCR_0002       <-- old
...                       ...
DATA_OCR_VD_0000          DATA_OCR_VD_0000    <-- new
DATA_OCR_VD_0001          DATA_OCR_VD_0001    <-- new
DATA_OCR_VD_0002          DATA_OCR_VD_0002    <-- new

With the new disk group in place, we can start moving:

- spfile(s)

- voting disks

- OCR

All three items will be moved to disk group DATA_OCR_VD.

Move spfile

In our configuration the ASM spfile is stored in the DATA_OCR disk group, so it has to move to the new disk group as well:

In the ASM instance (as user grid):

SQL> create pfile from spfile;
SQL> create spfile='+DATA_OCR_VD' from pfile;
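The two statements can also be run non-interactively. A minimal sketch, assuming it runs as user grid with ORACLE_SID and ORACLE_HOME set for the local ASM instance, sqlplus in PATH and OS authentication as SYSASM; move_asm_spfile is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: recreate the ASM spfile in another disk group.
# Assumes: user grid, ORACLE_SID/ORACLE_HOME set for the local ASM
# instance (e.g. +ASM1), sqlplus in PATH, OS authentication as SYSASM.
move_asm_spfile() {
  local target_dg="$1"
  if [ -z "$target_dg" ]; then
    echo "usage: move_asm_spfile +DISKGROUP" >&2
    return 2
  fi
  # Same two statements as above; the unquoted EOF lets ${target_dg} expand
  sqlplus -s "/ as sysasm" <<EOF
create pfile from spfile;
create spfile='${target_dg}' from pfile;
exit
EOF
}
```

Usage: move_asm_spfile +DATA_OCR_VD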

After this step the spfile parameter still shows the old location. To make sure the change is applied correctly, restart the cluster stack on each node in turn:

On node 1:

# crsctl stop cluster

# crsctl start cluster

In the ASM instance, as user grid:

SQL> show parameter spfile

NAME                                 TYPE        VALUE
------------------------------------ ----------- ------------------------------
spfile                               string      +DATA_OCR_2/m-lrkdbacc/asmpara
                                                 meterfile/registry.253.7545848
                                                 21

The spfile now points to the new location on node 1.
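Instead of reading the wrapped show parameter output, the check can be scripted. A minimal sketch, assuming the same environment as the ASM commands above (user grid, ORACLE_SID set for the local ASM instance); spfile_in_diskgroup and current_asm_spfile are hypothetical names. Querying v$parameter with pagesize 0 and a wide linesize should return the path on a single line, since it is far shorter than 300 characters.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if an spfile path lives in the given disk group.
#   $1 = spfile value, e.g. +DATA_OCR_2/m-lrkdbacc/asmparameterfile/...
#   $2 = disk group name, with or without the leading '+'
spfile_in_diskgroup() {
  local value="$1" dg="${2#+}"
  case "$value" in
    "+$dg"/*) return 0 ;;
    *)        return 1 ;;
  esac
}

# Hypothetical helper: print the running spfile value of the local ASM
# instance. pagesize 0 suppresses headings; awk drops blank lines and
# trailing padding.
current_asm_spfile() {
  sqlplus -s "/ as sysasm" <<'EOF' | awk 'NF { print $1 }'
set pagesize 0 linesize 300 feedback off
select value from v$parameter where name = 'spfile';
exit
EOF
}
```

Usage on each node after the restart: spfile_in_diskgroup "$(current_asm_spfile)" DATA_OCR_VD && echo "spfile moved".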

On node 2:

# crsctl stop cluster

# crsctl start cluster

SQL> show parameter sp